Configure Dialogflow CX using the wizard

Define bot details

First define bot details. Then configure the speech-to-text and text-to-speech services.

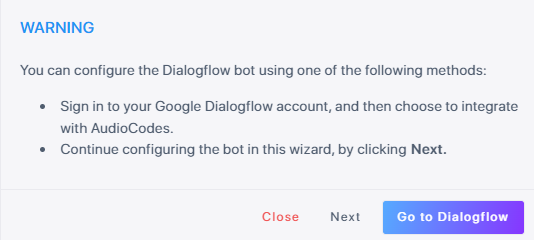

To configure the Dialogflow CX account directly using the wizard:

-

Click Next.

The following appears:

-

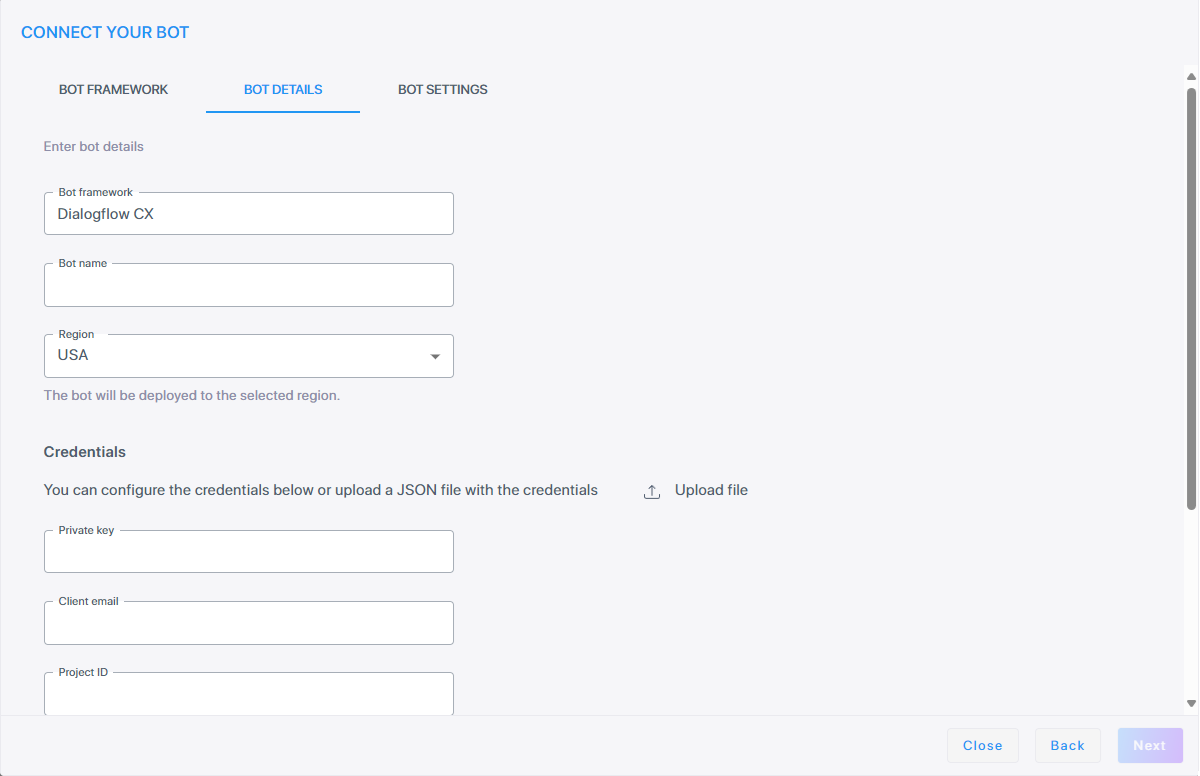

Under the Bot Details tab:

-

The 'Bot framework' field is automatically populated with Dialogflow CX.

-

In the 'Bot connection name' field, enter the name of the bot connection.

-

From the 'Live Hub region' drop-down list, select the geographical location (region) in which the Live Hub voice infrastructure is deployed.

-

Under the Credentials group, you can either configure the credentials below or upload a JSON file containing the credentials by clicking the upload icon.

-

In the 'Private Key' field, enter the private key, as you would when configuring the Google speech service.

-

In the 'Client email' field, enter the email address of the client.

-

In the 'Project ID' field, enter the Project ID.

-

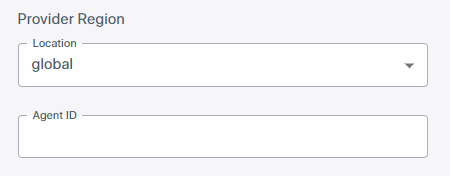

Under 'Provider Region', from the 'Location' drop-down list, select the region in which the provider is located.

-

In the 'Agent ID' field, enter the Agent ID.

-

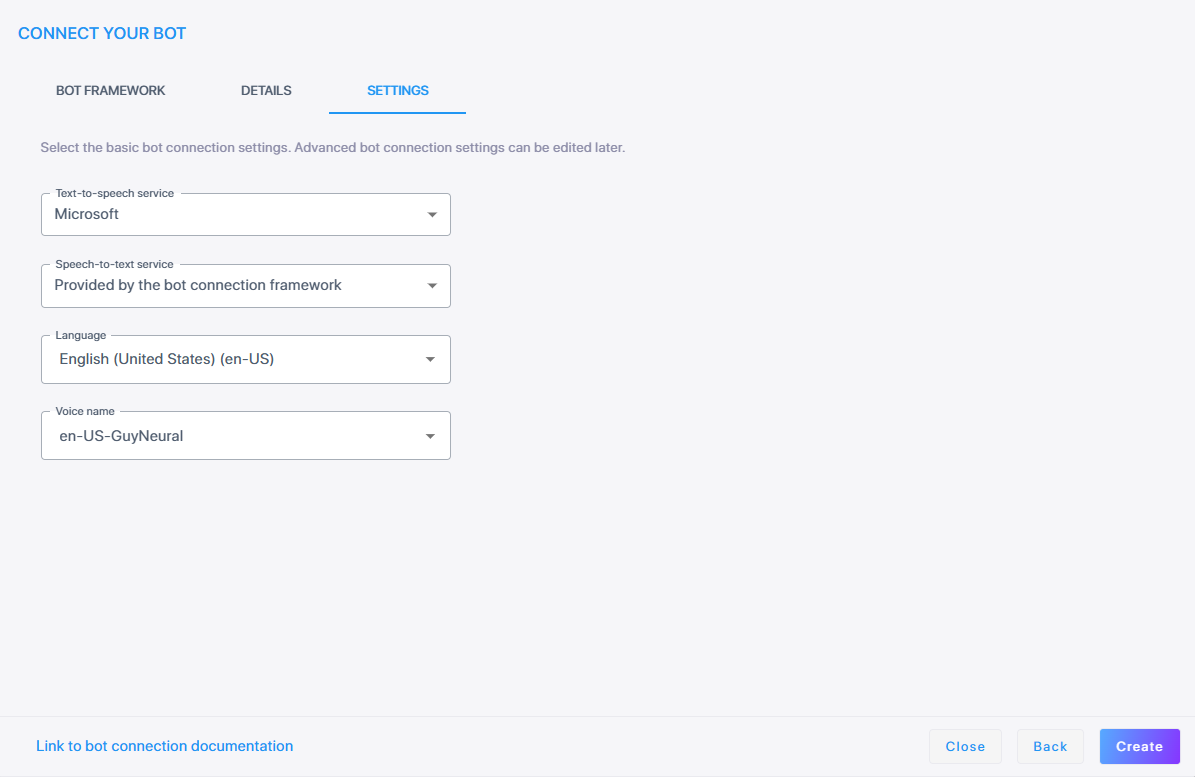

Click Next. The Settings screen displays different configuration options depending on which speech services you select.

-

Proceed to Configure Speech-to-Text service and then Configure Text-to-Speech service.
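If you enter the credentials manually rather than uploading the JSON file, the values typically come from two places in Google Cloud: a service account key file and the Dialogflow CX agent's resource name, which has the form `projects/<PROJECT_ID>/locations/<LOCATION>/agents/<AGENT_ID>`. The following Python sketch extracts both sets of values. It assumes the wizard's 'Private Key', 'Client email', and 'Project ID' fields map to the `private_key`, `client_email`, and `project_id` keys of a standard service account key file. The function names and the example agent name are hypothetical.

```python
import json
import re
from pathlib import Path

# Assumption: the wizard's Credentials fields map to these keys
# in a Google Cloud service account key file.
CREDENTIAL_KEYS = {
    "Private Key": "private_key",
    "Client email": "client_email",
    "Project ID": "project_id",
}

# Dialogflow CX agent resource names have the form
# projects/<PROJECT_ID>/locations/<LOCATION>/agents/<AGENT_ID>.
AGENT_NAME_RE = re.compile(
    r"^projects/(?P<project>[^/]+)/locations/(?P<location>[^/]+)/agents/(?P<agent>[^/]+)$"
)


def wizard_credentials(key_file: str) -> dict:
    """Read a service account key file and return the Credentials values by wizard label."""
    data = json.loads(Path(key_file).read_text(encoding="utf-8"))
    missing = [key for key in CREDENTIAL_KEYS.values() if key not in data]
    if missing:
        raise KeyError(f"key file is missing: {', '.join(missing)}")
    return {label: data[key] for label, key in CREDENTIAL_KEYS.items()}


def parse_agent_name(resource_name: str) -> dict:
    """Split an agent resource name into Project ID, Location, and Agent ID."""
    match = AGENT_NAME_RE.match(resource_name.strip())
    if match is None:
        raise ValueError(f"not a Dialogflow CX agent name: {resource_name!r}")
    return {
        "Project ID": match["project"],
        "Location": match["location"],
        "Agent ID": match["agent"],
    }


if __name__ == "__main__":
    # Hypothetical agent name, for illustration only.
    print(parse_agent_name("projects/my-project/locations/us-central1/agents/1234-abcd"))
```

The 'Location' value from the agent name is the region you select under 'Provider Region'. The 'Agent ID' is the last path segment.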

Configure Speech-to-Text service

Refer to the sections below for instructions on configuring your speech‑to‑text service. When done, continue to Configure Text-to-Speech service.

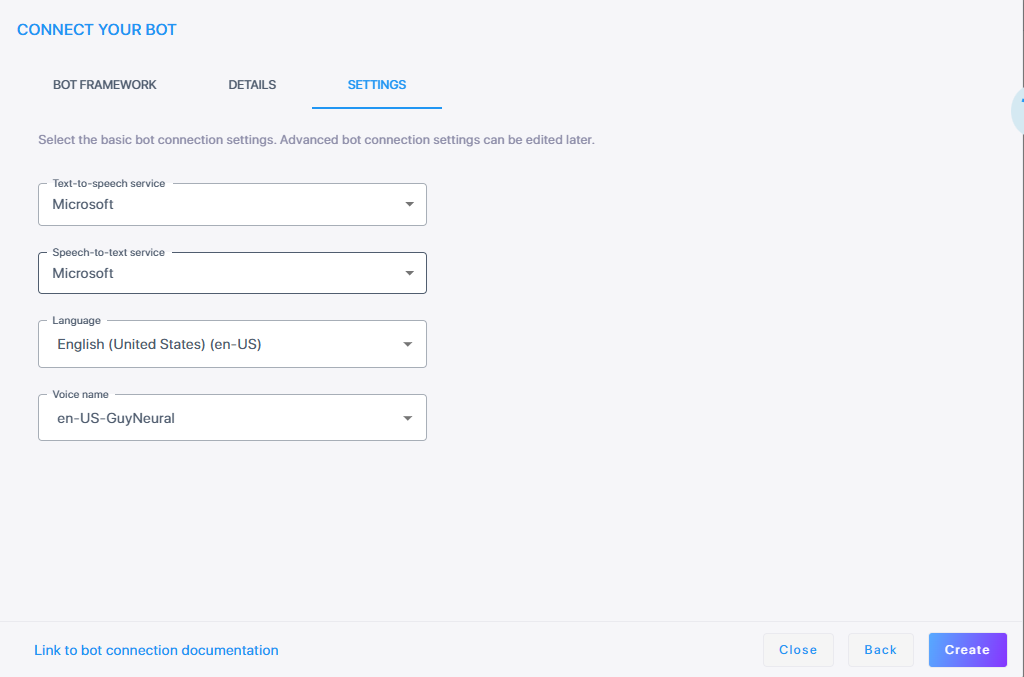

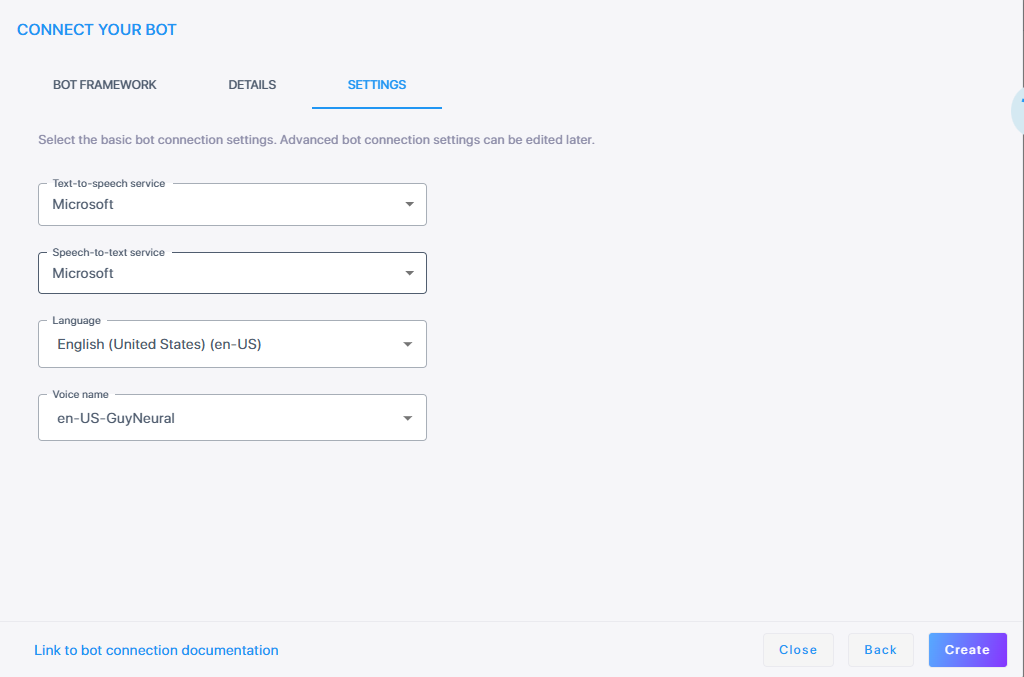

Microsoft

-

From the 'Speech-to-text service' drop-down list, select Microsoft.

-

From the 'Language' drop-down list, select the appropriate language.

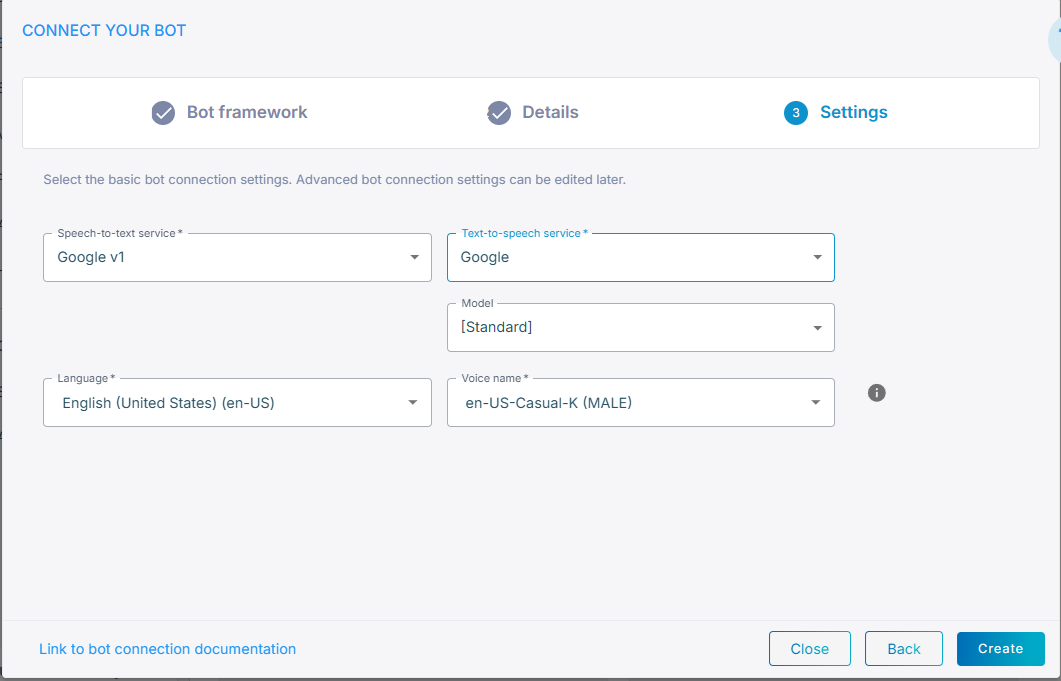

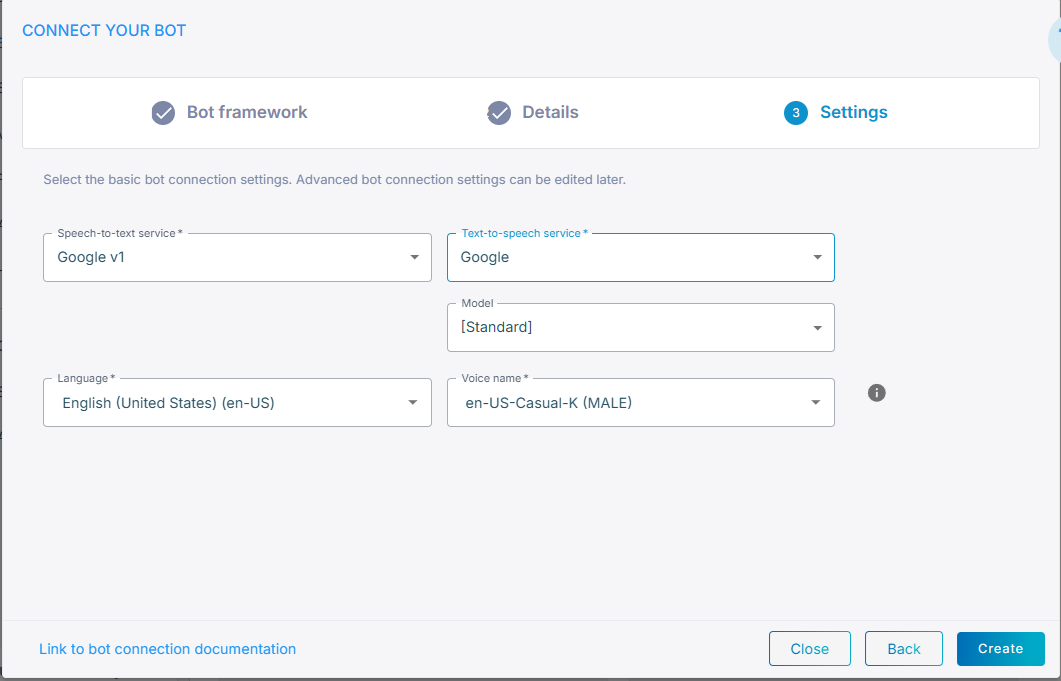

Google V1

-

From the 'Speech-to-text service' drop-down list, select Google V1.

-

From the 'Language' drop-down list, select the appropriate language.

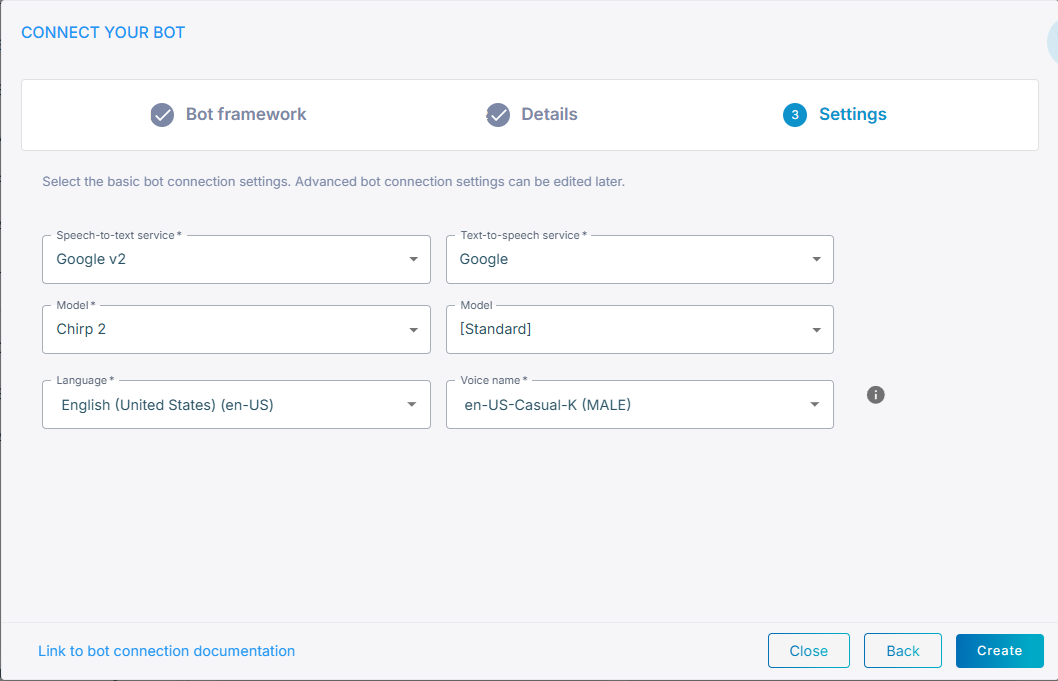

Google V2

-

From the 'Speech-to-text service' drop-down list, select Google V2.

-

From the 'Model' drop-down list, select the speech recognition model (for example, Chirp 2).

-

From the 'Language' drop-down list, select the appropriate language.

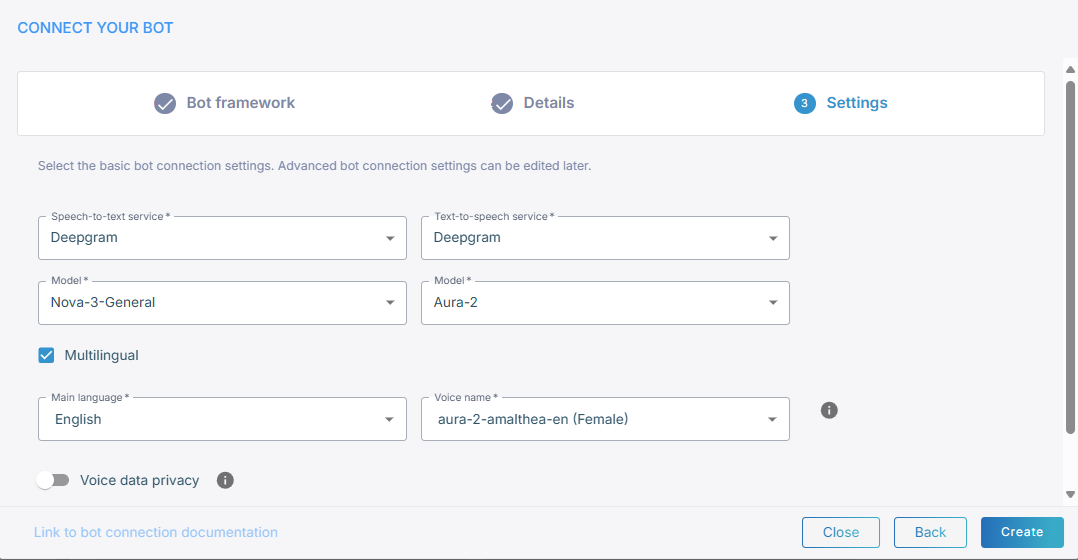

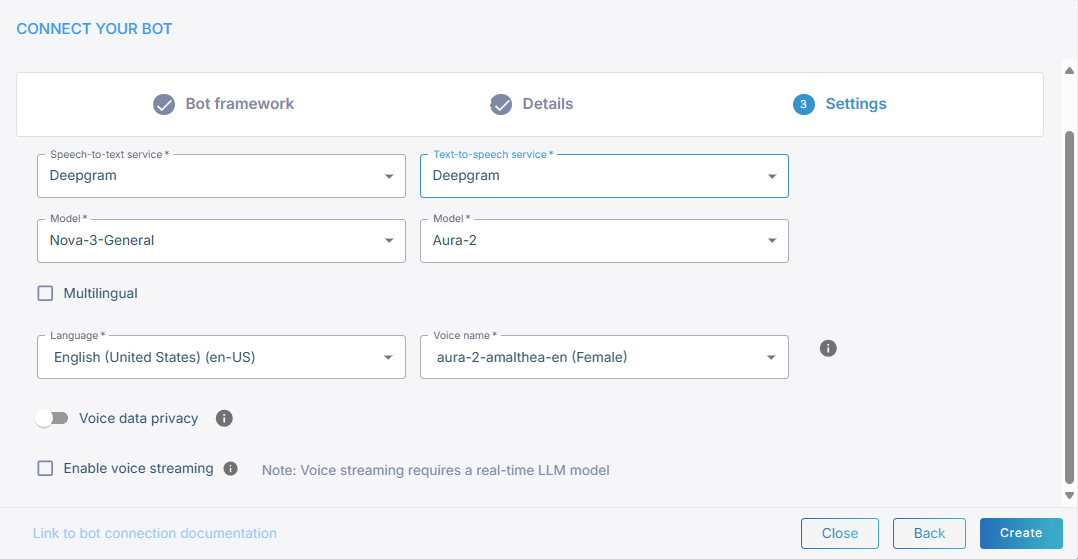

Deepgram

-

From the 'Speech-to-text service' drop-down list, select Deepgram.

-

From the 'Model' drop-down list, select the speech recognition model (for example, Nova-3-General).

Custom

-

From the 'Speech-to-text service' drop-down list, select Custom.

-

Continue with the steps that apply to the speech provider you configured.

Configure Text-to-Speech service

Refer to the sections below for instructions on configuring your text‑to‑speech service. When done, click Create; the new bot connection is created.

Microsoft

-

From the 'Text-to-speech service' drop-down list, select Microsoft.

-

From the 'Voice name' drop-down list, select the appropriate voice name.

-

Click Create; the new bot connection is created.

Google

-

From the 'Text-to-speech service' drop-down list, select Google.

Note: For manual Dialogflow bots, you can select any speech-to-text and text-to-speech provider from the drop-down lists, including defined speech services.

-

From the 'Model' drop-down list, select the speech synthesis model (for example, Gemini 2.5 Pro).

Note: If you experience a TTS timeout, consider increasing ttsConnectionTimeoutMS to a value greater than 5000 to improve stability.

-

From the 'Voice name' drop-down list, select the appropriate voice name.

-

Click Create; the new bot connection is created.
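The timeout note above names one parameter, `ttsConnectionTimeoutMS`, and a 5000 ms threshold, but not where the parameter is set. The following Python sketch therefore only assumes that the parameter is supplied as a JSON key/value pair (for example, in an advanced-settings field of the bot connection). It builds such a fragment and checks that the value exceeds the threshold. The helper name and the 8000 ms example value are illustrative, not recommendations.

```python
import json

# Threshold taken from the timeout note; whether 5000 ms is the actual default is not stated.
MIN_TTS_TIMEOUT_MS = 5000


def tts_timeout_setting(timeout_ms: int) -> str:
    """Return a JSON fragment that sets ttsConnectionTimeoutMS above the threshold.

    Assumption: the bot connection accepts this parameter as a JSON key/value pair.
    """
    if timeout_ms <= MIN_TTS_TIMEOUT_MS:
        raise ValueError(f"ttsConnectionTimeoutMS should exceed {MIN_TTS_TIMEOUT_MS} ms")
    return json.dumps({"ttsConnectionTimeoutMS": timeout_ms})


print(tts_timeout_setting(8000))  # {"ttsConnectionTimeoutMS": 8000}
```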

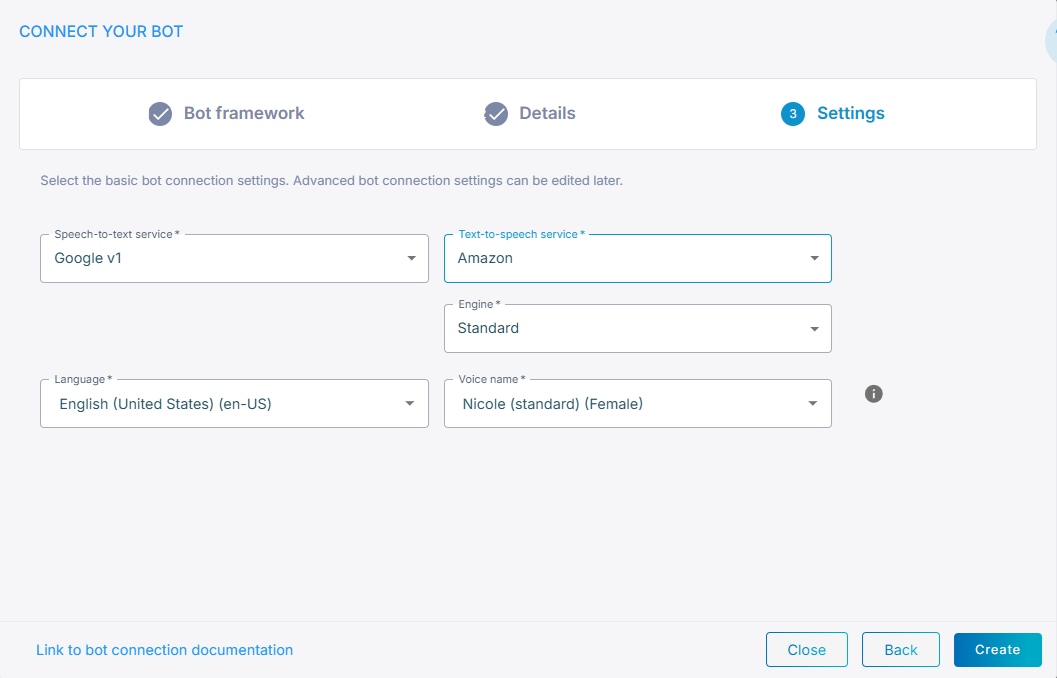

Amazon

-

From the 'Text-to-speech service' drop-down list, select Amazon.

-

From the 'Engine' drop-down list, select the speech synthesis engine.

-

From the 'Voice name' drop-down list, select the appropriate voice name.

-

Click Create; the new bot connection is created.
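The available voice names depend on the engine you select. To check which voices an engine supports before choosing one in the wizard, you can query Amazon Polly directly. The following is a minimal Python sketch using boto3's `describe_voices` call. It assumes that AWS credentials and a default region are configured locally and that the wizard's voice names correspond to Polly voice IDs; that correspondence is an assumption. The helper names are hypothetical.

```python
def voices_for_engine(response: dict, engine: str) -> list[str]:
    """Return the sorted voice IDs in a describe_voices response that support the engine."""
    return sorted(
        voice["Id"]
        for voice in response.get("Voices", [])
        if engine in voice.get("SupportedEngines", [])
    )


def list_polly_voices(engine: str, language_code: str) -> list[str]:
    """Page through Polly's describe_voices results for one engine and language."""
    import boto3  # imported lazily; requires boto3 and configured AWS credentials

    polly = boto3.client("polly")
    voices, token = [], None
    while True:
        kwargs = {"Engine": engine, "LanguageCode": language_code}
        if token:
            kwargs["NextToken"] = token
        page = polly.describe_voices(**kwargs)
        voices.extend(voices_for_engine(page, engine))
        token = page.get("NextToken")
        if not token:
            return sorted(voices)
```

For example, `list_polly_voices("neural", "en-US")` lists the US English voices that support the neural engine.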

Deepgram

-

From the 'Text-to-speech service' drop-down list, select Deepgram.


-

From the 'Model' drop-down list, select the speech synthesis model (for example, Aura-2).

-

Select Multilingual if you need multi-language support. When enabled, a 'Main language' field appears where you can define your main language.

-

If you didn’t select Multilingual, from the 'Language' drop-down list, select the appropriate language.

-

From the 'Voice name' drop-down list, select the appropriate voice name.

-

Use the 'Allow Deepgram to use the audio data to improve its models' toggle to control whether Deepgram may use your audio data to improve its models. This option is enabled by default. When it is enabled, Deepgram may use the audio data for advanced model development in accordance with Deepgram's usage terms. Disabling this option results in higher usage costs.

-

If you want voice streaming, select Enable voice streaming. This lets Live Hub stream voice directly between the bot and the user instead of sending text. Voice streaming requires a real-time LLM. If you enable voice streaming, the speech-to-text and text-to-speech drop-down fields are no longer displayed.

-

Click Create; the new bot connection is created.

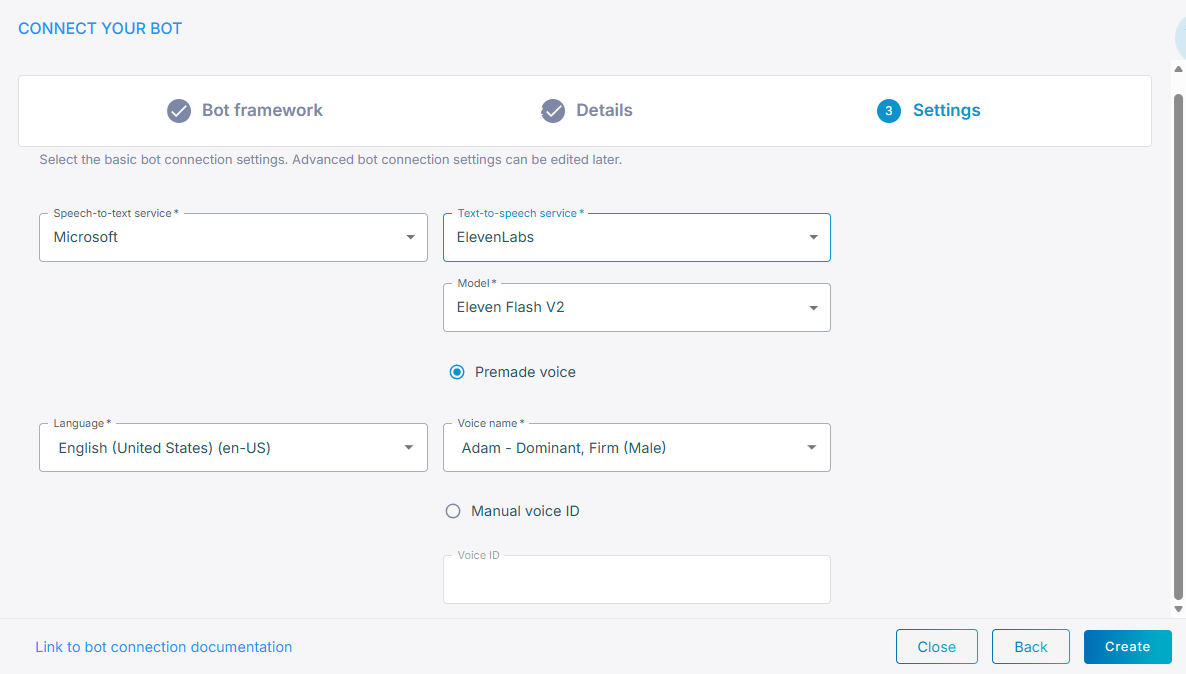

ElevenLabs

-

From the 'Text-to-speech service' drop-down list, select ElevenLabs.

-

From the 'Model' drop-down list, select the speech synthesis model.

-

Select Premade voice or Manual voice ID.

-

If you selected Premade voice, enter the 'Voice name' that is provided by ElevenLabs.

-

If you selected Manual voice ID, enter the 'Voice ID' of the voice that you created.

-

Click Create; the new bot connection is created.
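To find the 'Voice ID' of a voice you created, or the names of premade voices, you can list your voices through the ElevenLabs API. The following Python sketch assumes the `GET https://api.elevenlabs.io/v1/voices` endpoint with an `xi-api-key` header. It also assumes that each returned voice has `voice_id`, `name`, and `category` fields, with `"premade"` marking premade voices. Confirm these details against the ElevenLabs API reference. The helper names are hypothetical.

```python
import json
import urllib.request

VOICES_URL = "https://api.elevenlabs.io/v1/voices"


def fetch_voices(api_key: str) -> dict:
    """Call the ElevenLabs voices endpoint and return the decoded JSON body."""
    request = urllib.request.Request(VOICES_URL, headers={"xi-api-key": api_key})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def split_voices(payload: dict) -> tuple[list[str], list[tuple[str, str]]]:
    """Return (premade voice names, (name, voice_id) pairs for all other voices)."""
    premade, custom = [], []
    for voice in payload.get("voices", []):
        if voice.get("category") == "premade":
            premade.append(voice["name"])
        else:
            custom.append((voice["name"], voice["voice_id"]))
    return premade, custom
```

For example, `premade, custom = split_voices(fetch_voices("<your-api-key>"))` returns premade voice names for the Premade voice option, and (name, ID) pairs for the Manual voice ID option.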

Custom

-

From the 'Text-to-speech service' drop-down list, select Custom.

-

Continue with the steps that apply to the speech provider you configured.

The Integration value is Manual.